I think the word "feminism" is very misleading.

I get that it's meant to give both men and women equal rights, since, unfortunately, there are sexist men out there who see women as nothing but sex objects and kitchen wives (I've dealt with friends like that), but the way it's worded makes it sound more like women trying to be dominant over men.

It also doesn't help that there are "feminists" out there who take it way too far (and don't even get me started on those annoying "feminists" who claim they want "equal rights" but then use their sex as an easy cop-out to get out of a situation).

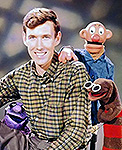

Welcome to the Muppet Central Forum!